Hadoop is an Apache open-source Java framework (a set of classes and interfaces that implement commonly reusable data structures) that supports processing large data sets in a distributed computing environment. It is designed to run on clusters of commodity machines.

This means that the physical data is stored at various locations, linked via the network and a distributed file system. Data does not reside on a single monolithic server where an SQL engine is applied to crunch it. The whole concept relies on redundancy to deal with huge volumes of data coming from different sources.
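To make the idea of data spread across machines concrete, here is a minimal Java sketch of how a distributed file system such as HDFS divides a file into fixed-size blocks that can then be stored on different machines. It is a plain-Java simulation, not the Hadoop API: the class `BlockSplitter`, the node names, and the round-robin placement are hypothetical simplifications (HDFS's default block size is 128 MB in Hadoop 2.x and later, and its real placement policy is rack-aware).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simulation: splits a file of a given size into fixed-size blocks
// and assigns each block to a node in round-robin order (not real HDFS placement).
final class BlockSplitter {

    // A block has an index, a length in bytes, and the node that stores it.
    record Block(int index, long lengthBytes, String node) {}

    static List<Block> split(long fileSizeBytes, long blockSizeBytes, List<String> nodes) {
        if (blockSizeBytes <= 0 || nodes.isEmpty()) {
            throw new IllegalArgumentException("block size must be positive and nodes non-empty");
        }
        List<Block> blocks = new ArrayList<>();
        long remaining = fileSizeBytes;
        int index = 0;
        while (remaining > 0) {
            // The last block may be smaller than the configured block size.
            long length = Math.min(blockSizeBytes, remaining);
            String node = nodes.get(index % nodes.size());
            blocks.add(new Block(index, length, node));
            remaining -= length;
            index++;
        }
        return blocks;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        // A 300 MB file with 128 MB blocks yields blocks of 128, 128 and 44 MB.
        List<Block> blocks = split(300 * mb, 128 * mb, List.of("node1", "node2", "node3"));
        for (Block b : blocks) {
            System.out.println("block " + b.index() + ": " + b.lengthBytes() / mb + " MB on " + b.node());
        }
    }
}
```

Because no single machine holds the whole file, each block can be read and processed where it is stored.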

Traditional enterprise databases are based on a single monolithic machine. If the volume of data increases, the machine must be upgraded with a faster processor or more memory. This is called vertical scaling. It is very expensive in terms of hardware cost, and it also limits scalability, because a single machine can only be upgraded so far.

The Hadoop architecture is built to support huge volumes of data with dense computing, so scalability is one of the most important parameters when dealing with the Hadoop ecosystem. Hadoop runs on a cluster of commodity servers, that is, low-specification servers. If you need to store more data and increase scalability, you add more low-specification machines to the cluster. This is called horizontal scaling.
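The arithmetic of horizontal scaling can be sketched in a few lines of Java. Assuming, hypothetically, that each commodity node contributes a fixed amount of usable disk and that every block is stored `replicationFactor` times (HDFS defaults to 3), the number of nodes needed grows linearly with data volume, so growth is absorbed by adding machines rather than replacing one. The class name and the 4 TB-per-node figure are illustrative assumptions, and the sketch ignores overheads such as temporary and non-HDFS storage.

```java
// Hypothetical capacity estimate for horizontal scaling: raw storage needed is
// data volume times the replication factor, spread over identical nodes.
final class ClusterSizing {

    // Returns the minimum number of nodes, rounding up so capacity is never short.
    static long nodesNeeded(long dataTb, int replicationFactor, long usableTbPerNode) {
        if (dataTb < 0 || replicationFactor < 1 || usableTbPerNode < 1) {
            throw new IllegalArgumentException("invalid sizing parameters");
        }
        long rawTb = dataTb * replicationFactor;
        // Integer ceiling division: ceil(rawTb / usableTbPerNode).
        return (rawTb + usableTbPerNode - 1) / usableTbPerNode;
    }

    public static void main(String[] args) {
        // 100 TB of data, 3 replicas, 4 TB usable per node: 300 TB raw, 75 nodes.
        System.out.println(nodesNeeded(100, 3, 4));
        // Doubling the data doubles the node count instead of requiring a bigger machine.
        System.out.println(nodesNeeded(200, 3, 4));
    }
}
```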

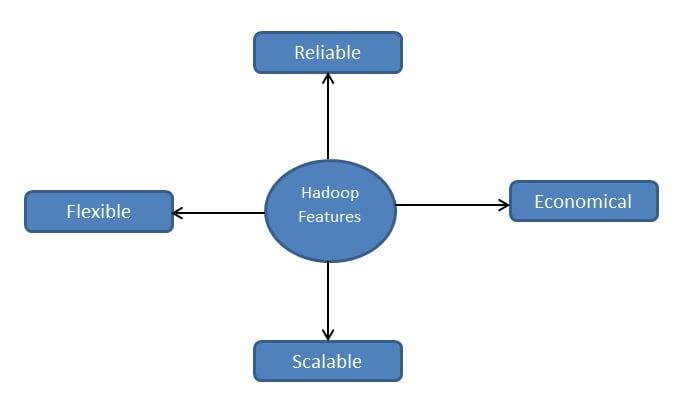

Key features of Hadoop

The image below shows some of the key features of Hadoop. Each of these features is explained in detail afterwards:

![Key features of Hadoop](http://rcvacademy.com/wp-content/uploads/2016/11/hadoop-features.jpg)

1. Flexibility

Hadoop brings flexibility in terms of data processing. Handling unstructured and semi-structured data is one of the biggest challenges organizations face today. According to various reports published worldwide, only 20% of the data in an organization is structured, while the remaining 80% is either unstructured or semi-structured.

The Hadoop ecosystem gives you the flexibility to handle and analyze all types of data for decision-making systems.
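A common way Hadoop processes unstructured text is the MapReduce model, with word count as the classic example. The sketch below imitates the map, shuffle, and reduce phases in plain Java on a single machine so it runs without a cluster; it does not use Hadoop's `Mapper` and `Reducer` classes, and the class name, method names, and sample log lines are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical single-machine imitation of MapReduce word count.
final class LocalWordCount {

    // Map phase: emit a (word, 1) pair for every word in a line of free text.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String token : line.toLowerCase().split("[^a-z0-9]+")) {
            if (!token.isEmpty()) {
                pairs.add(Map.entry(token, 1));
            }
        }
        return pairs;
    }

    // Shuffle phase: group values by key, sorted by key as MapReduce does before reducing.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce phase: sum the counts emitted for each word.
    static Map<String, Integer> reduce(Map<String, List<Integer>> grouped) {
        Map<String, Integer> counts = new TreeMap<>();
        grouped.forEach((word, ones) -> counts.put(word, ones.stream().mapToInt(Integer::intValue).sum()));
        return counts;
    }

    static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        return reduce(shuffle(pairs));
    }

    public static void main(String[] args) {
        // Free-form text with no fixed schema, such as log lines or social media posts.
        List<String> lines = List.of(
            "ERROR disk full on node2",
            "user tweet: Hadoop handles big data",
            "error: node2 unreachable");
        System.out.println(wordCount(lines));
    }
}
```

No schema is declared up front; structure is imposed only when the map function reads each line.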

2. Scalability

Hadoop is an open-source platform that scales horizontally: new nodes can easily be added to the cluster as data volume grows, without altering anything in the existing systems or programs. This makes the Hadoop ecosystem extremely scalable.

3. Reliable

In Hadoop, data is replicated on two or more commodity machines, depending on the replication factor configured in the Hadoop ecosystem (the HDFS `dfs.replication` property, which defaults to 3). Therefore, even if one of the commodity machines crashes, the data is still available on the other machines. This makes the whole ecosystem fault tolerant: if one node goes down, the system automatically relocates the work to another node where the data is replicated.
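This fault-tolerance behavior can be simulated in plain Java: each block is placed on `replicationFactor` distinct nodes, and a read succeeds as long as at least one node holding a replica is alive. The class name, the node names, and the simple consecutive-node placement are hypothetical; real HDFS uses a rack-aware placement policy and automatically re-replicates under-replicated blocks, which this sketch does not model.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.Set;

// Hypothetical simulation of block replication and failover on a small cluster.
final class ReplicatedCluster {
    private final List<String> nodes;
    private final int replicationFactor;
    private final Map<Integer, List<String>> replicas = new HashMap<>();
    private final Set<String> failedNodes = new HashSet<>();

    ReplicatedCluster(List<String> nodes, int replicationFactor) {
        if (replicationFactor < 1 || replicationFactor > nodes.size()) {
            throw new IllegalArgumentException("replication factor must be between 1 and the node count");
        }
        this.nodes = List.copyOf(nodes);
        this.replicationFactor = replicationFactor;
    }

    // Places a block on replicationFactor consecutive distinct nodes, starting at blockId mod node count.
    void storeBlock(int blockId) {
        List<String> holders = new ArrayList<>();
        for (int i = 0; i < replicationFactor; i++) {
            holders.add(nodes.get(Math.floorMod(blockId + i, nodes.size())));
        }
        replicas.put(blockId, holders);
    }

    void failNode(String node) {
        failedNodes.add(node);
    }

    // Returns a live node that holds the block, or empty if every replica is lost.
    Optional<String> locate(int blockId) {
        return replicas.getOrDefault(blockId, List.of()).stream()
                .filter(node -> !failedNodes.contains(node))
                .findFirst();
    }

    public static void main(String[] args) {
        ReplicatedCluster cluster = new ReplicatedCluster(List.of("node1", "node2", "node3", "node4"), 3);
        cluster.storeBlock(0); // stored on node1, node2 and node3
        cluster.failNode("node1");
        // The read is redirected to the next live replica.
        System.out.println(cluster.locate(0).orElse("data lost"));
    }
}
```

With a replication factor of 3, a block is only lost if all three nodes holding it fail before the data is re-replicated.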

4. Economical

The massive use of parallel computing has substantially decreased the cost per terabyte of data for storage and processing. According to some analysts, the cost per terabyte of data in the Hadoop ecosystem is approximately $1,000.