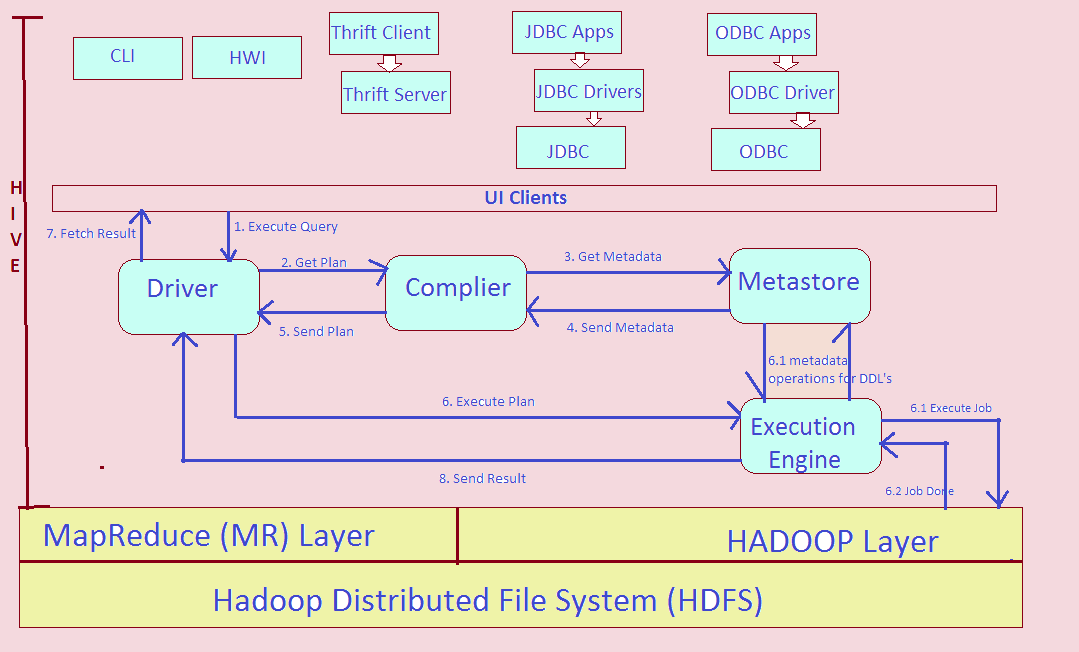

The diagram above shows the Apache Hive architecture and the path a typical query takes through the Hive system.

The UI calls the driver's execute-query interface (step 1). The driver creates a session handle for the query and sends the query to the compiler to generate an execution plan (step 2).
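As a rough illustration of this hand-off, the Python sketch below models a driver that records a session handle for each query and then asks a compiler for a plan. `ToyDriver`, `ToyCompiler`, `SessionHandle`, and `execute_query` are hypothetical names chosen for this example, not Hive's own Java classes, and the "plan" is only a placeholder list.

```python
import uuid
from dataclasses import dataclass


@dataclass
class SessionHandle:
    # Hypothetical handle the driver associates with one submitted query.
    query_id: str
    query: str


class ToyCompiler:
    """Hypothetical compiler: returns a placeholder plan for the query text."""

    def compile(self, query: str) -> list[str]:
        # A real compiler would parse, analyze and optimize the query.
        return [f"plan-for: {query}"]


class ToyDriver:
    """Hypothetical driver: UI -> driver -> compiler."""

    def __init__(self, compiler: ToyCompiler) -> None:
        self.compiler = compiler
        self.sessions: dict[str, SessionHandle] = {}

    def execute_query(self, query: str) -> tuple[SessionHandle, list[str]]:
        # The UI calls this entry point with the query text.
        handle = SessionHandle(query_id=str(uuid.uuid4()), query=query)
        self.sessions[handle.query_id] = handle
        # The driver hands the query to the compiler to get an execution plan.
        plan = self.compiler.compile(query)
        return handle, plan
```

Keeping the handle in `sessions` lets later calls (for example, fetching results) find the query again by its ID.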

The compiler needs metadata to build the plan, so it sends a “getMetaData” request to the metastore and receives the metadata back in a “sendMetaData” response (steps 3 and 4).

The compiler uses this metadata to type-check the expressions in the query and to prune partitions based on the query predicates.
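To make the metadata exchange and its two uses concrete, here is a minimal Python sketch assuming a table partitioned by a single string column. `ToyMetastore`, `TableMetadata`, `type_check`, and `prune_partitions` are hypothetical, only two column types are modeled, and only an equality predicate on the partition column is used for pruning; Hive's real metastore and pruner are far more general.

```python
from dataclasses import dataclass

# Only two column types are modeled in this sketch.
PY_TYPES = {"string": str, "int": int}


@dataclass
class TableMetadata:
    # Hypothetical shape of a metastore answer for one table.
    columns: dict[str, str]   # column name -> type name ("string" or "int")
    partition_column: str
    partitions: list[str]     # existing values of the partition column


class ToyMetastore:
    """Hypothetical metastore answering getMetaData-style lookups."""

    def __init__(self, tables: dict[str, TableMetadata]) -> None:
        self.tables = tables

    def get_metadata(self, table: str) -> TableMetadata:
        return self.tables[table]


def type_check(meta: TableMetadata, column: str, literal: object) -> None:
    # Reject a predicate on an unknown column or with a literal of the wrong type.
    if column not in meta.columns:
        raise ValueError(f"unknown column: {column}")
    expected = meta.columns[column]
    if not isinstance(literal, PY_TYPES[expected]):
        raise TypeError(f"{column} is {expected}, got {type(literal).__name__}")


def prune_partitions(meta: TableMetadata, column: str, value: object) -> list[str]:
    # An equality predicate on the partition column keeps only the matching partition;
    # a predicate on any other column cannot prune, so every partition is scanned.
    if column != meta.partition_column:
        return list(meta.partitions)
    return [p for p in meta.partitions if p == value]
```

For a query such as `WHERE dt = '2024-01-02'` on a table partitioned by `dt`, only that one partition directory would need to be read.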

The plan generated by the compiler (step 5) is a directed acyclic graph (DAG) of stages, where each stage is either a MapReduce job, a metadata operation, or an operation on HDFS.
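One way to picture such a plan is as stages with dependency edges; the sketch below uses Python's standard `graphlib.TopologicalSorter` to derive an order in which no stage runs before the stages it depends on. The `Stage` and `StageKind` types and the stage names are hypothetical illustrations, not Hive's internal plan classes.

```python
from dataclasses import dataclass, field
from enum import Enum
from graphlib import TopologicalSorter


class StageKind(Enum):
    MAPREDUCE = "mapreduce"   # a MapReduce job
    METADATA = "metadata"     # a metadata operation
    HDFS = "hdfs"             # an operation on HDFS


@dataclass
class Stage:
    name: str
    kind: StageKind
    depends_on: list[str] = field(default_factory=list)


def execution_order(stages: list[Stage]) -> list[str]:
    # Map each stage to the set of stages it depends on. TopologicalSorter
    # emits predecessors before dependents and raises CycleError on a cycle.
    graph = {s.name: set(s.depends_on) for s in stages}
    return list(TopologicalSorter(graph).static_order())
```

For a plan where a MapReduce stage feeds an HDFS move, which in turn feeds a metadata update, `execution_order` returns the three names in that chain order. Independent stages may come out in either order, which is what would let an engine run them in parallel.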

The execution engine submits these stages to the appropriate components (steps 6, 6.1, 6.2, and 6.3). Once the output is generated, it is written to a temporary HDFS file through the serializer.
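The sketch below illustrates both halves of this step under simplifying assumptions: an engine that dispatches each stage to whatever component is registered for its kind, and a writer that serializes result rows into a temporary file. A local temporary directory stands in for HDFS, and the tab-delimited text format is an arbitrary choice for the example; `ToyExecutionEngine`, `serialize_row`, and `write_result` are hypothetical names.

```python
import os
import tempfile
from typing import Callable, Iterable


def serialize_row(row: tuple) -> str:
    # Hypothetical serializer: tab-delimited text, one row per line.
    return "\t".join(str(v) for v in row) + "\n"


class ToyExecutionEngine:
    """Hypothetical engine that hands each stage to the component for its kind."""

    def __init__(self) -> None:
        self.components: dict[str, Callable[[str], None]] = {}

    def register(self, kind: str, component: Callable[[str], None]) -> None:
        self.components[kind] = component

    def run(self, stages: list[tuple[str, str]]) -> None:
        # stages are (name, kind) pairs, assumed to be in dependency order already.
        for name, kind in stages:
            if kind not in self.components:
                raise KeyError(f"no component registered for {kind!r}")
            self.components[kind](name)


def write_result(rows: Iterable[tuple], directory: str) -> str:
    # Write the final rows to a fresh temporary file through the serializer and
    # return its path so a later fetch can read the file back.
    fd, path = tempfile.mkstemp(prefix="query-result-", suffix=".txt", dir=directory)
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(serialize_row(row))
    return path
```

Registering components by kind keeps the engine unaware of how a MapReduce job or an HDFS operation actually runs. It only routes each stage to the right place.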

The execution engine reads the contents of this file directly from HDFS as part of the driver's fetch call, and the results are returned to the UI client (steps 7, 8, and 9).
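The fetch side can be sketched as reading the result file back, deserializing each line, and handing rows to the caller in batches, the way repeated fetch calls might page through the output. This assumes the tab-delimited format and a local path in place of HDFS; `deserialize_line`, `fetch_batches`, and `fetch_results` are hypothetical helpers, not Hive APIs.

```python
from typing import Iterable, Iterator


def deserialize_line(line: str) -> list[str]:
    # Inverse of a tab-delimited serializer; every value comes back as a string.
    return line.rstrip("\n").split("\t")


def fetch_batches(lines: Iterable[str], batch_size: int = 100) -> Iterator[list[list[str]]]:
    """Yield deserialized rows in batches of at most batch_size, ending with a
    shorter batch if the row count is not a multiple of batch_size."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    batch: list[list[str]] = []
    for line in lines:
        batch.append(deserialize_line(line))
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def fetch_results(path: str, batch_size: int = 100) -> Iterator[list[list[str]]]:
    # Read the temporary result file (a local path stands in for HDFS here).
    with open(path, encoding="utf-8") as f:
        yield from fetch_batches(f, batch_size)
```

Streaming in batches means the client never has to hold the whole result in memory at once.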